Hands-on-Reinforcement-Learning-with-PyTorch/5.3 DDPG Clipped Double Q Learning.ipynb at master · PacktPublishing/Hands-on-Reinforcement-Learning-with-PyTorch · GitHub

TD3: Learning To Run With AI. Learn to build one of the most powerful… | by Donal Byrne | Towards Data Science

![Learn to Move Through a Combination of Policy Gradient Algorithms: DDPG, D4PG, and TD3 | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/dfe0e29a2d766d8c8b72c990874f3ab998aa3d79/4-Table1-1.png)

[PDF] Learn to Move Through a Combination of Policy Gradient Algorithms: DDPG, D4PG, and TD3 | Semantic Scholar

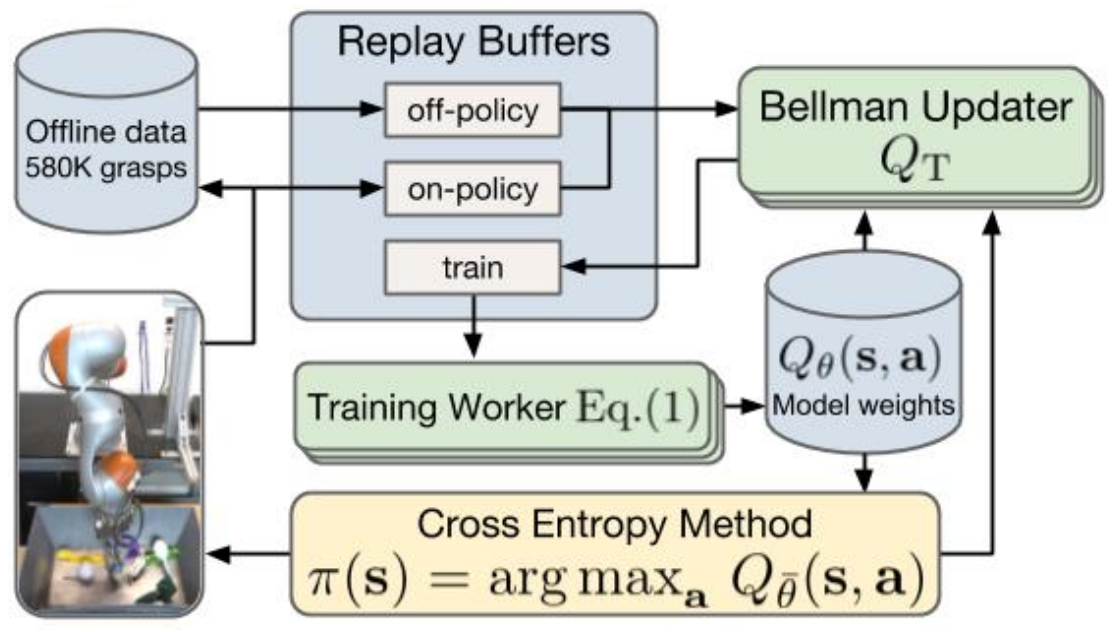

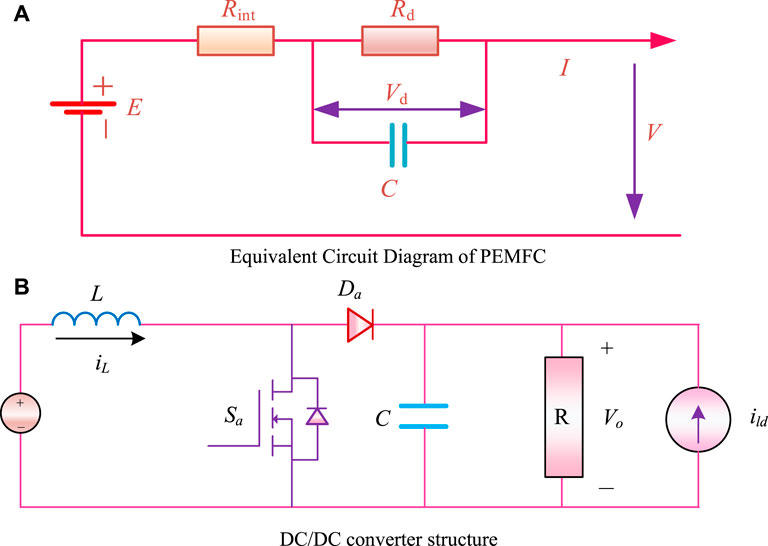

Frontiers | Distributed Imitation-Orientated Deep Reinforcement Learning Method for Optimal PEMFC Output Voltage Control | Energy Research

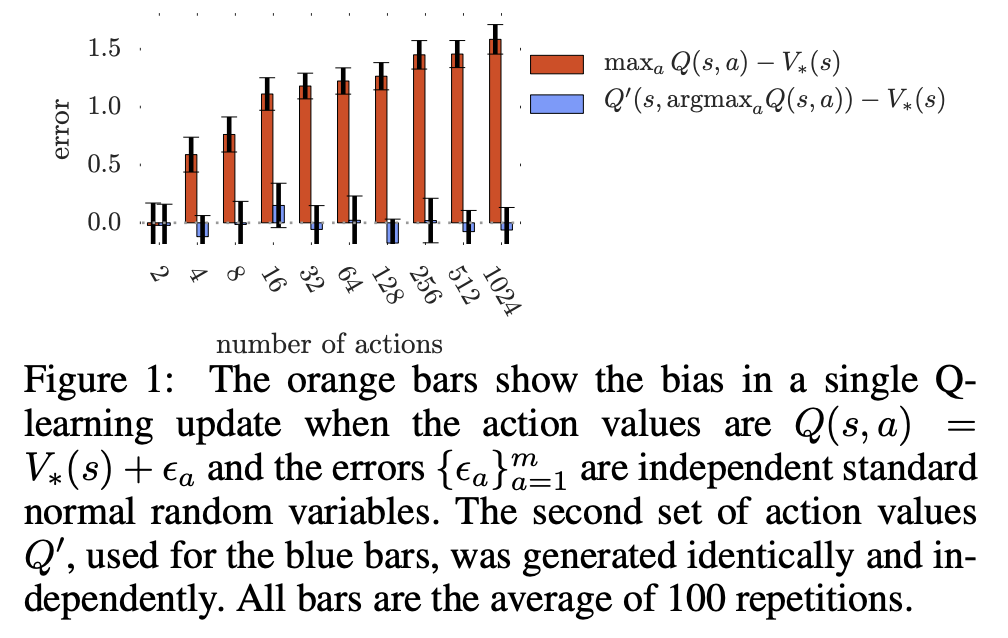

Comparison of Polyak averaging constants (a) and Single DQN vs Double...

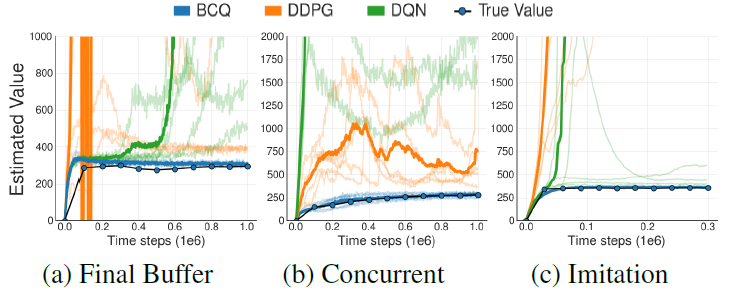

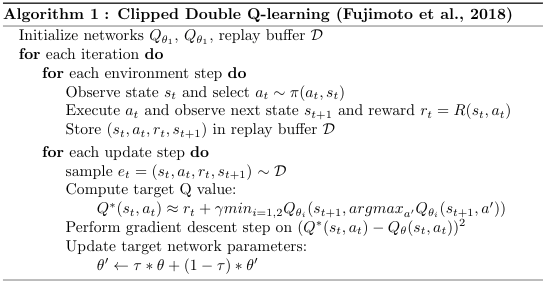

Ablation of Clipped Double Q-Learning (Fujimoto et al., 2018). We test...
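The ablation caption above concerns Clipped Double Q-Learning as introduced in TD3 by Fujimoto et al. (2018): instead of bootstrapping from a single target critic as DDPG does, the critic target uses the minimum of two target critics' estimates, y = r + γ · min(Q'_1(s', a'), Q'_2(s', a')), which counteracts overestimation bias. Below is a minimal NumPy sketch of just that target computation. It assumes the two target critics have already been evaluated at the next state and the target policy's action; the function names `clipped_double_q_target` and `single_q_target` are hypothetical and this is not the code from the Packt notebook.

```python
import numpy as np

def single_q_target(rewards, dones, q_next, gamma=0.99):
    """DDPG-style target: bootstrap from one target critic (hypothetical helper)."""
    return rewards + gamma * (1.0 - dones) * q_next

def clipped_double_q_target(rewards, dones, q1_next, q2_next, gamma=0.99):
    """TD3-style clipped double-Q target (hypothetical helper).

    q1_next, q2_next: estimates Q'_1(s', a') and Q'_2(s', a') from the two
    target critics, assumed precomputed for a batch of transitions.
    dones: 1.0 for terminal transitions, which disables bootstrapping.
    """
    # Take the element-wise minimum of the two critics before bootstrapping.
    min_q = np.minimum(q1_next, q2_next)
    return rewards + gamma * (1.0 - dones) * min_q

rewards = np.array([1.0, 0.0])
dones = np.array([0.0, 1.0])
q1_next = np.array([10.0, 5.0])
q2_next = np.array([8.0, 7.0])

print(single_q_target(rewards, dones, q1_next))                   # [10.9  0. ]
print(clipped_double_q_target(rewards, dones, q1_next, q2_next))  # [8.92 0.  ]
```

For the first (non-terminal) transition the single-critic target bootstraps from 10.0, while the clipped target uses the smaller estimate 8.0; for the terminal transition both targets reduce to the reward. Taking the minimum deliberately biases the target low, trading a small underestimation for protection against the overestimation that a single critic can accumulate.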